Artificial Intelligence and the Rise of Economic Inequality

Note: This article was originally posted on Medium.com via Towards Data Science. However, I’m reposting it on my blog to preserve the text. Unfortunately, images may not transfer over so well…

Technology has played a key role in the United States labor market for centuries, enabling workers to carry out their daily tasks far more efficiently. This increase in productivity, driven by technological advances, has helped the United States become one of the strongest economies in the world, regularly creating thousands of jobs and keeping a large share of the country employed. However, technological advances have also displaced many workers from their jobs as organizations have sought to reduce employment costs by using automation to replace low-skilled jobs (i.e. jobs that require manual labor and can easily be done by machines).

https://www.minnpost.com/sites/default/files/images/articles/distoflaborforcebysector.png

For example, agriculture employed almost 50 percent of American workers in 1870. However, according to a Bureau of Labor Statistics report, the agriculture industry employs less than 2 percent of the nation’s workforce as of 2015.[1] Though this small bastion of agricultural workers now produces food for many more people in the United States and even the global population, their share of the United States workforce has significantly decreased, illustrating the “magnitude of what technological displacement can do.”[2]

The agriculture industry is not alone. More advanced forms of technology have entered the US workforce, automating even more labor-intensive jobs and making their way into low-skill jobs such as those of cashiers, switchboard operators, and bank tellers. Recently, a new form of technology has begun to take hold in the marketplace: Artificial Intelligence. As its name would imply, the term covers computer programs that are capable of mimicking human thought and performing tasks that are nearly impossible to specify in a stepwise manner. These tasks include image recognition, trend analysis, detecting medical conditions, and much more. This newer form of technology can essentially do what humans can do if given enough inputs and expected outputs (similar to how a person learns a particular skill, i.e. through trial and error on a set number of example cases).

Artificial Intelligence (AI) poses a more immediate problem for a continually threatened part of the workforce: low-skill and uneducated workers. Currently, much of the literature on AI’s effect on the US workforce remains speculative, since companies are only starting to roll out forms of this new technology in their regular operations (and thus it is not yet possible to observe the long-term effects of AI on the workforce). However, based on historical trends and the current capabilities of AI, it is entirely possible that the rise of artificial intelligence will lead to the displacement of entry-level and low-skill jobs (i.e. jobs that do not require significant training or education), creating a larger divide between specialized and unspecialized workers in modern society. To explore this problem, this post is organized into three main sections: defining what AI is and its current capabilities, reviewing the previous effects of technological advancements on the US labor force, and lastly extrapolating the potential future effects of artificial intelligence on the US workforce and society.

Defining AI and Its Current Capabilities

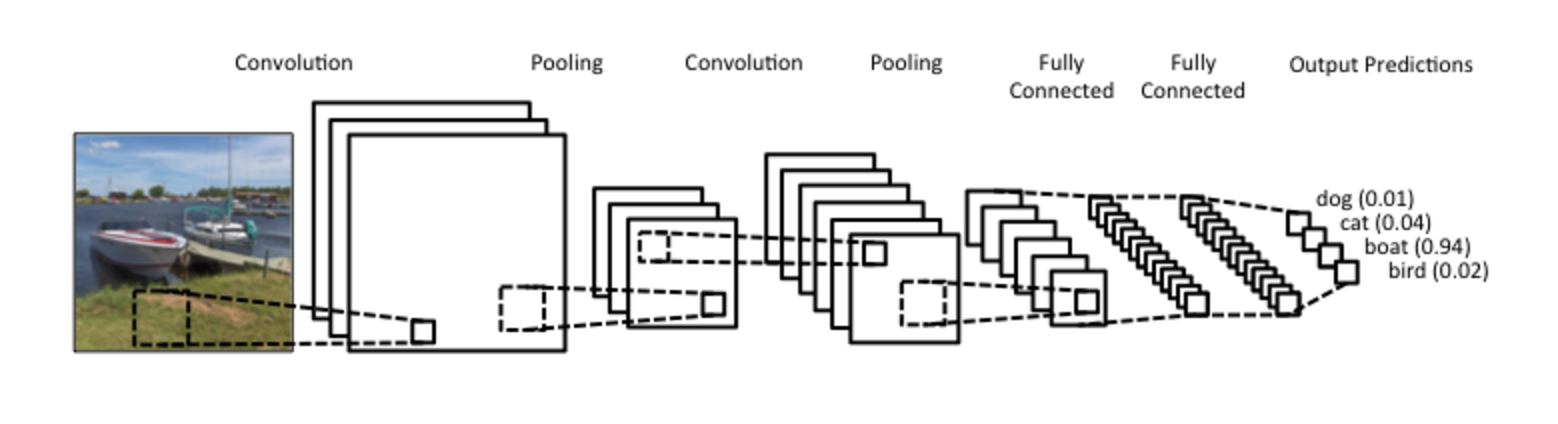

A basic convolutional neural network: http://d3kbpzbmcynnmx.cloudfront.net/wp-content/uploads/2015/11/Screen-Shot-2015-11-07-at-7.26.20-AM.png

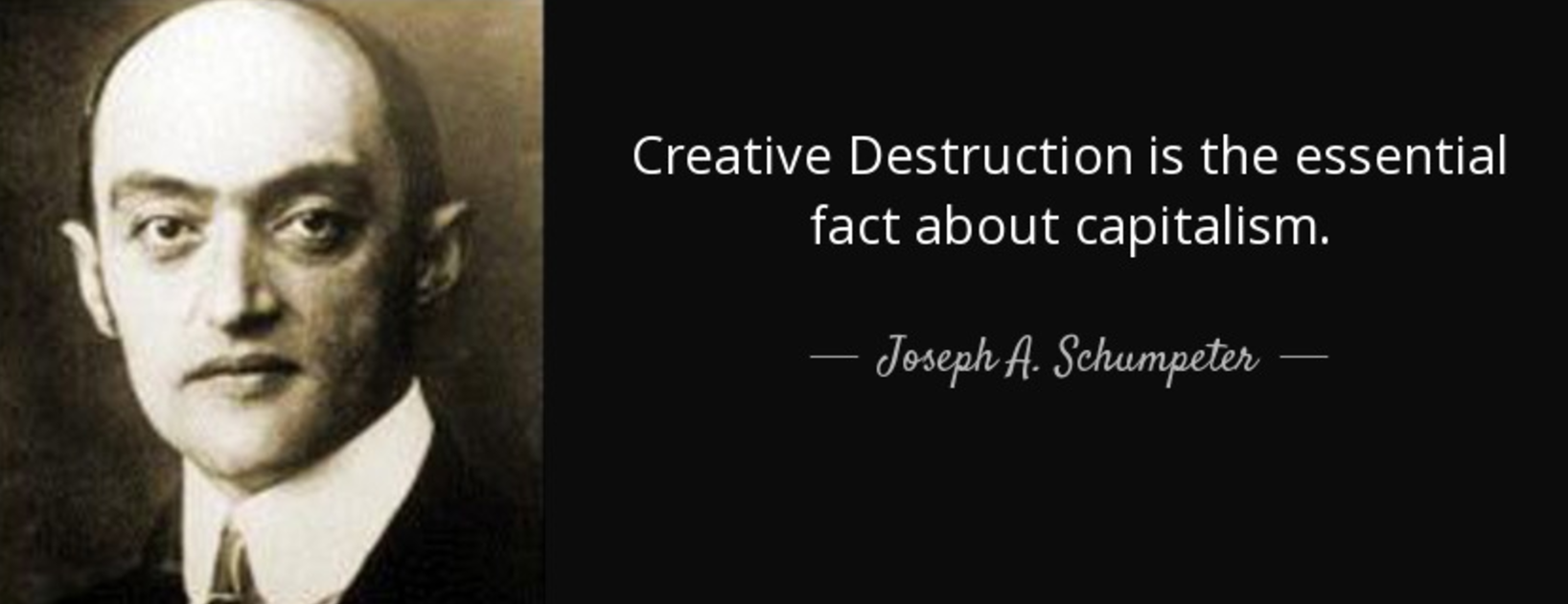

Before diving into AI, it is important to establish an understanding about the state of programming and automation before AI’s development. Computers are excellent at executing a set of instructions and programmers are the ones who often codify those instructions in the form of a program. These programs, when run on a computer, are superb at doing what they are told to do. For example, a standard program many computer science students write is to generate the first n Fibonacci numbers (0, 1, 1, 2, 3, 5, 8, 13, 21 …) where each successive number is the sum of the two numbers before it (after 0 and 1). A programmer can write a simple 6-line version of this program that can produce the first 30,662 Fibonacci numbers within 10 seconds.
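As a rough illustration of the kind of short sequential program described above, here is a minimal Python sketch. The function name `first_fibonacci` is made up for this example, and this is not the exact program behind the timing figure quoted above; it only shows how few explicit instructions the task needs.

```python
def first_fibonacci(n):
    """Return a list of the first n Fibonacci numbers, starting from 0."""
    numbers = []
    a, b = 0, 1
    for _ in range(n):
        numbers.append(a)
        # Each successive number is the sum of the two before it.
        a, b = b, a + b
    return numbers


print(first_fibonacci(9))  # [0, 1, 1, 2, 3, 5, 8, 13, 21]
```

Every step here is spelled out by the programmer in advance, which is exactly what becomes impractical for the recognition tasks discussed next.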

But if there were a more complicated task, such as one that involves something along the lines of object detection and pattern recognition, a simple sequential program would not be enough.

Consider, for example, the problem of detecting whether an image contains a bird or not. For humans, this is easy; we have seen enough birds in our lives to know that if something has a beak, beady eyes, feathers, and wings, it is most likely a bird.

For a computer, however, this is tough because a computer only “sees” an image on a pixel-by-pixel basis and cannot usually draw connections between pixels to form a definition of a general object. From the perspective of a programmer, it is a tough task to program a computer with the knowledge of how to identify a bird. Perhaps this approach would involve telling the computer to look for parts of the image that are a particular color and conform to the general shape of a bird’s body, include a triangular beak of another color, and contain two black circles for eyes. But these criteria do not encompass all the different types of birds. Moreover, if any part of the bird in the image is occluded, the computer would fail at its task.

This point is where AI comes in. Artificial intelligence (specifically a subset called neural networks) can “learn” in a fashion similar to humans through statistical analysis and optimization based on training data it receives. If a developer can create an AI (composed of artificial neurons that take in input and produce a trained output), this program can then use thousands of images that do and do not contain birds to train itself. From that point, the trained network can then create a model that can accurately predict whether a bird is or is not in an image, even if it hasn’t seen that picture before. In fact, developers at Flickr did nearly the same task and achieved a stunning accuracy rate.[3],[4]
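To make the idea of “learning” from labeled examples more concrete, here is a deliberately tiny Python sketch of a single artificial neuron trained by gradient descent. It is an illustration under stated assumptions, not how Flickr's system works: real image classifiers use convolutional networks with many layers operating on raw pixels, whereas this toy uses two made-up numeric features per image (hypothetical "feather-likeness" and "beak-likeness" scores between 0 and 1) and a handful of invented training examples.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(weights, bias, features):
    """Weighted sum of the inputs passed through the sigmoid."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


def train_neuron(examples, labels, epochs=1000, learning_rate=0.5):
    """Fit one neuron by repeatedly nudging weights to reduce its error."""
    weights = [0.0] * len(examples[0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in zip(examples, labels):
            error = neuron_output(weights, bias, features) - label
            # Move each weight a small step against the error.
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


def is_bird(weights, bias, features):
    """Classify as 'bird' when the neuron's output is at least 0.5."""
    return neuron_output(weights, bias, features) >= 0.5


# Invented training data: [feather-likeness, beak-likeness] per image.
examples = [[0.9, 0.8], [0.8, 0.9], [0.7, 0.6], [0.95, 0.7],
            [0.1, 0.2], [0.2, 0.1], [0.3, 0.2], [0.05, 0.3]]
labels = [1, 1, 1, 1, 0, 0, 0, 0]  # 1 = contains a bird, 0 = does not

weights, bias = train_neuron(examples, labels)

# Two inputs the neuron never saw during training.
print(is_bird(weights, bias, [0.8, 0.7]))
print(is_bird(weights, bias, [0.2, 0.2]))
```

Nobody wrote a rule for what a bird looks like here. The weights are adjusted from the examples alone, which is the basic mechanism that much larger networks scale up.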

http://code.flickr.net/2014/10/20/introducing-flickr-park-or-bird/

But AI can do much more than just detect whether or not birds are in a picture. It can also identify objects from a camera mounted on a car and instruct that car to move accordingly to avoid obstacles and obey street signs, forming the basis for self-driving cars. Companies such as Uber, the popular ride-sharing startup, have taken this idea further and have started to deploy self-driving cars that only need engineers to monitor the vehicles instead of having regular drivers operate them.

Furthermore, AI can help in life-critical applications in the medical field, aiding radiologists in the process of diagnosing tumors by catching them on MRI scans before they manifest themselves significantly, sometimes even before doctors can see the changes.

Automatic detection of cerebral microbleeds from MR Images via 3D Convolutional Neural Networks http://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=7403984&tag=1

Variants of AI known as Long Short-Term Memory Recurrent Neural Networks can even do more creative tasks such as generating music in the style of one or multiple artists.[5] This same form of AI has also been used to train 24/7 virtual customer service representatives for companies. These online assistants never tire and learn from the collective experiences of millions of conversations, questions, and issues customers have faced before.

In short, artificial intelligence has started to accomplish tasks once thought to be feasible for humans alone, and in some cases, AI can beat people at those tasks if given enough training data. Though the process of creating an AI is very resource-intensive, the decreasing price of hardware and the development of better algorithms are making it easier for even regular laptop owners to create an AI on their computer in as little as half an hour. It is clear that officials will need to address the impact of AI on the workforce in the near future. Researchers have already demonstrated its abilities, and the technology is finally catching up to allow regular consumers to create and use AI at little to no cost.

Previous Technological Advancements in the Workforce

Luddite destroying machine http://www.economicshelp.org/blog/6717/economics/the-luddite-fallacy/

Historically, the integration of new technologies into the workforce has initially replaced several kinds of jobs but has opened avenues to new types of jobs in other areas.

The classic example of this pattern is the Luddite protests against new textile technologies in the 19th century. In 1811, a group of British weavers and textile workers led a protest against the recent invention and implementation of the automated loom and knitting frame in textile factories. These individuals, now termed the Luddites, first gathered in Nottingham and destroyed the machines that robbed them of their jobs as artisans and specialized laborers. The Luddite movement spread across the region surrounding Nottingham, and people continued to hold these machine-breaking protests until 1816, when the English government moved to suppress any further revolts from the group.

Now, people use the term “Luddite” to describe an individual who is opposed to industrialization, automation, or any form of new technology. We know that the Luddites were resisting a change that would form the basis for the industrial revolution and the birth of the factory system, which would employ millions of individuals and avoid the need for exhaustive specialized labor for menial tasks. But as new technological advancements come along, how is it possible to know whether there will be replacement jobs for those who have been displaced by the technology itself? The only way to answer this question is first to look at previous trends and extrapolate from there.

The 19th century was marked by a change in technology that reduced the need for specialized and higher-skilled workers, as in the case of the Luddites, resulting in unskilled-biased technical change. This trend reversed in the late 20th century with the advent of the internet and of computational power available to the consumer. This change favored the productivity and well-being of skilled individuals, resulting in skill-biased technical change.

White House report on AI

The White House report on Artificial Intelligence, Automation, and the Economy gives several examples of how this change is manifesting itself, noting that the decline in manufacturing jobs has resulted in weak labor demand for less educated workers. However, there has been an increased number of opportunities for “those engaged in abstract thinking, creative ability, and problem-solving skills” as technology continues to be integrated into the workplace.[6]

In the case of computational automation of manufacturing jobs, companies have decreasing incentives to keep smaller factories with human staff in more remote areas when they can close those plants and relocate to larger centralized facilities. In one particular instance, researchers identified several industrial facilities that chose to replace manual laborers with machines. After following the employment patterns of those displaced workers over ten years, they found that the earnings of the workers remained depressed by 11% relative to their previous wages.[7] This finding suggests that many displaced workers have a diminished ability to match their current skills to, or retrain for, new, in-demand jobs. However, even if workers can maintain employment in other jobs, there remain significant doubts as to whether there will be jobs available for these displaced individuals.

Machines for a car assembly line (src: http://www.xiduanpress.com/wp-content/uploads/2016/01/Assembly-Line.png)

Throughout history, many developed economies have followed the trend of “creative destruction,” a term coined by 20th-century economist Joseph Schumpeter that describes the phenomenon of “new production units replac[ing] outdated ones.”[8] Researchers have demonstrated the existence of this trend several times, most significantly Davis, Haltiwanger, and Schuh (DHS), who found that in any given year from 1972 to 1988, 10% of jobs were eliminated but were all replaced with new jobs.[9] Foster, Haltiwanger, and Krizan followed this study in 2001 with evidence that these cycles of job destruction and creation are most directly attributable to technology (after they had performed an industry-by-industry breakdown of DHS’s data).[10] However, Randall Collins points out that economists who tend to adopt a Schumpeter-inspired view of the effect of new technologies on the workforce are “rely[ing] on nothing more than extrapolation of past trends for the argument that the number of jobs created by new products will make up for the jobs lost by destruction of old markets.”[11] Previous economic data does support Schumpeter’s theory to date, but Schumpeter himself conceived of “creative destruction” at a time when the idea of a computer did not yet exist. Only very recently has there been a significant amount of computerization of the workforce that has actively threatened to replace specialized human workers altogether.

Creative Destruction Quote Schumpeter “Creative Destruction is the essential fact about capitalism”

Collins also expands on his point, stating that the new jobs created by the integration of automation in the workplace require more specialization and formal education. These jobs include IT services, website development, and consulting services. Collins explains his earlier point about the inaccessibility of newer jobs for the uneducated, noting the recent rise in demand for job-retraining programs to train non-specialized workers in a new skillset. However, there is a limit beyond which it is no longer possible for many people to specialize in a particular skillset. For example, software engineering, a job that only came into existence with the invention of programming languages, requires at least four years of training at a formal university. Effectively, the Schumpeterian view cannot be taken as true in a society that is becoming more and more automated because previous trends hold no bearing on what will happen in the future. Artificial Intelligence may be able to create new industries, but whether those industries can offer a viable job prospect for the displaced has yet to be seen.

Predictions of Artificial Intelligence in the Workforce

Artificial Intelligence poses a large threat to the current state of the economy; however, most of the consequences of introducing this new form of technology into the marketplace are not yet known. The main issue of debate is whether a skill-biased technical change will continue under the scope of companies choosing to integrate an AI into the workforce.

Carl Frey and Michael Osborne from Oxford University posit that AI technologies can replace nearly 47% of US jobs.[12] Furthermore, the Organization for Economic Cooperation and Development highlighted the employee populations that are most likely to be significantly affected by automation in the near term, noting that 40% of workers with a high school degree or less will face some form of unemployment caused by technological advancements in the coming decade. This displacement of jobs may leave few options for these individuals other than to specialize. However, there are multiple issues with trying to educate and train a significant portion of the United States workforce to specialize in fields that offer favorable employment prospects in the future.

While in the past it was entirely feasible for a subset of individuals to switch from one low-skill job to another with minimal training (as seen with work education programs during the Great Depression), it is somewhat unreasonable to expect 47% of the population to receive further university-level education. The only way this might be achievable would be with the aid of significant government funding. Collins notes that as “the amount of job creation for humans compared to work by computers steadily decrease[s],” it would be necessary for the government to support a large unemployed population.[13] The United States has previously addressed mass unemployment with a Keynesian welfare state, implemented in the first half of the 1900s, to preserve a system of capitalism, albeit a government-backed and controlled one. On the policy side, the government can use taxes to push companies to hire human workers over implementing AI, though this measure would be a temporary fix as the cost of AI decreases over time.

In a situation of mass unemployment, the implementation of a universal basic income may be one way to alleviate the resulting issues in society. However, this approach, according to Collins, opens even developed nations to a “fiscal crisis of the state and a split between the rich and those dependent on the government for basic sustenance.”[14] This divide in society would most likely be “larger than anything seen in current capitalistic markets,” especially when the reliant population is not able to maintain employment. Furthermore, this system removes buying power from the reliant individual “while undercutting consumer markets and making capitalism unsustainable.”[15]

Inequality visualized (src: https://s3.amazonaws.com/blog.oxfamamerica.org/politicsofpoverty/2015/04/Inequality-014.jpg)

Collins makes it very clear that a capitalist economy as it is known today in the United States cannot survive should the use of AI cause massive unemployment. A universal basic income, while an excellent means of establishing a baseline standard of living for people throughout society, fails to maintain the current economic system and further widens the wage gap between two segments of society: those who haven’t had their jobs replaced by AI yet and those who already have. Evidence for this extrapolation can be seen in the current state of the welfare system in the United States. Individuals who are unemployed for extended periods of time are much less likely to wean themselves off welfare, leading to reduced buying power and lower economic status compared to their employed peers.[16] The growth of artificial intelligence in the workforce could act as a driving force for unemployment and the resulting dependence on governmental programs for aid. This dependence could then foster greater economic inequality between those who are unemployed and those whose jobs are too specialized for AI to replace.

However, the view that AI will cause mass unemployment is not the only potential viewpoint. David Autor suggests that, after the integration of AI, there may be two populations of individuals who will grow in economic prosperity: the people who own corporations and do specialized labor, and workers who interact with humans on a daily basis (e.g. nurses). His belief is that “Even if an [AI] can handle complicated patterns and analyze much more data than a human ever could accurately, it lacks in the ability to communicate with other people and explain its decision-making process.”[17] This view of AI’s role in the workplace would allow for a reasonable degree of upward mobility and allow for the possibility of the maintenance of a middle class and a capitalist society as it stands.

Of course, how well Collins’ and Autor’s views hold will depend on several factors in the technology’s rollout in the workforce, but a crucial point to address is how to maintain some semblance of the current class structure that exists in the United States in the face of AI.

Conclusions

If one can gather anything from this paper, it is the following: Artificial Intelligence poses a threat to the structure of the workforce as it is known today. However, the effects of this threat can only be theorized for now. In Collins’ view, the threat of job replacement by AI could cause a disastrous divide between displaced workers and those whose jobs are too specialized to be replaced, ultimately resulting in the establishment of a basic income and a larger role for government in maintaining a welfare state. Autor’s theory agrees that AI will replace the jobs of low-skill laborers but predicts that jobs reliant on significant human interaction will grow instead, giving those displaced by AI an opportunity to retrain. Based on historical trends, it is clear that technology can create new industries of work, serving as a new source of jobs to replace the ones displaced by that technology itself. However, the accessibility of those jobs to displaced workers is the primary concern in a market in which AI is a competitor for jobs. The combination of past historical trends and the current state of artificial intelligence indicates that new industries accessible to low-skill workers must be created to avoid broad changes to the economic and societal structure seen today.

Overall, AI may serve as a threat in the short term, but it is entirely possible that the integration of AI can create numerous employment opportunities through new industries. To curb the potential effects of artificial intelligence on the economy and society, it is necessary for officials to enact policy that can educate individuals whose jobs may be threatened by AI and limit the integration of AI in susceptible industries. This technology can accomplish amazing feats on the level of humans, and it would be wrong to avoid exploring its abilities in the modern world further. However, AI must be responsibly integrated to avoid economic inequality in the near future. If introduced at the right pace, this new form of technology can aid humans in unique ways and lead to the further development of humanity from the technical, economic, and scientific perspectives among many more.

Bibliography:

[1] Employment by major industry sector. (n.d.). Retrieved April 26, 2017, from http://www.bls.gov/emp/ep_table_201.htm

[2] Collins, R. (2014). The End of Middle-Class Work: No More Escapes. In Does capitalism have a future? (pp. 39–51). New York, NY: Oxford University Press.

[3] Introducing PARK or BIRD. (2014, October 20). Retrieved April 26, 2017, from http://code.flickr.net/2014/10/20/introducing-flickr-park-or-bird/

[4] Zeiler, M. D., & Fergus, R. (2014). Visualizing and Understanding Convolutional Networks. Computer Vision – ECCV 2014 Lecture Notes in Computer Science, 818–833. doi:10.1007/978-3-319-10590-1_53

[5] Johnson, D. (2015, August 02). Composing Music With Recurrent Neural Networks. Retrieved April 26, 2017, from http://www.hexahedria.com/2015/08/03/composing-music-with-recurrent-neural-networks/

[6] Artificial Intelligence, Automation, and the Economy (pp. 8–26, Rep.). (2016). Washington, D.C.: Executive Office of the President.

[7] Davis, S. J., & Wachter, T. V. (2011). Recessions and the Costs of Job Loss. Brookings Papers on Economic Activity, 2011(2), 1–72. doi:10.1353/eca.2011.0016

[8] What are creative destruction and disruption innovation? (n.d.). Creative Destruction and the Sharing Economy, 63–91. doi:10.4337/9781786433435.00008

[9] Davis, S. J., Haltiwanger, J., & Schuh, S. (1993). Small Business and Job Creation: Dissecting the Myth and Reassessing the Facts. National Bureau of Economic Research Working Paper Series, 1–49.

[10] Hulten, C. R., Dean, E. R., & Harper, M. J. (2001). New developments in productivity analysis. Chicago: University of Chicago Press.

[11] Collins 40

[12] Frey, C. B., & Osborne, M. A. (n.d.). The Future of Employment: How Susceptible Are Jobs to Computerisation. Retrieved from http://www.oxfordmartin.ox.ac.uk/downloads/academic/The_Future_of_Employment.pdf

[13] Collins 41

[14] Collins 50

[15] Collins 51

[16] Melkersson, M., & Saarela, J. (2004). Welfare Participation and Welfare Dependence among the Unemployed. Journal of Population Economics, 17(3), 409–431. Retrieved from http://www.jstor.org/stable/20007919

[17] Autor, D. (2014). Polanyi’s Paradox and the Shape of Employment Growth. Cambridge, Mass: National Bureau of Economic Research.